We found that the engineering practices that make our system legible to an AI agent are the same ones that reduce toil for our own developers.

This isn't a coincidence; it’s a direct fix for the operational load that kills developer velocity. Our best engineers were constantly being pulled from feature work to put out fires, leading to endless context-switching and burnout.

We built a better, more sustainable environment for our team by enforcing the discipline an AI needs: clear interfaces, minimal abstractions, and fast feedback loops. It turns out, what's good for the agent is good for the engineer.

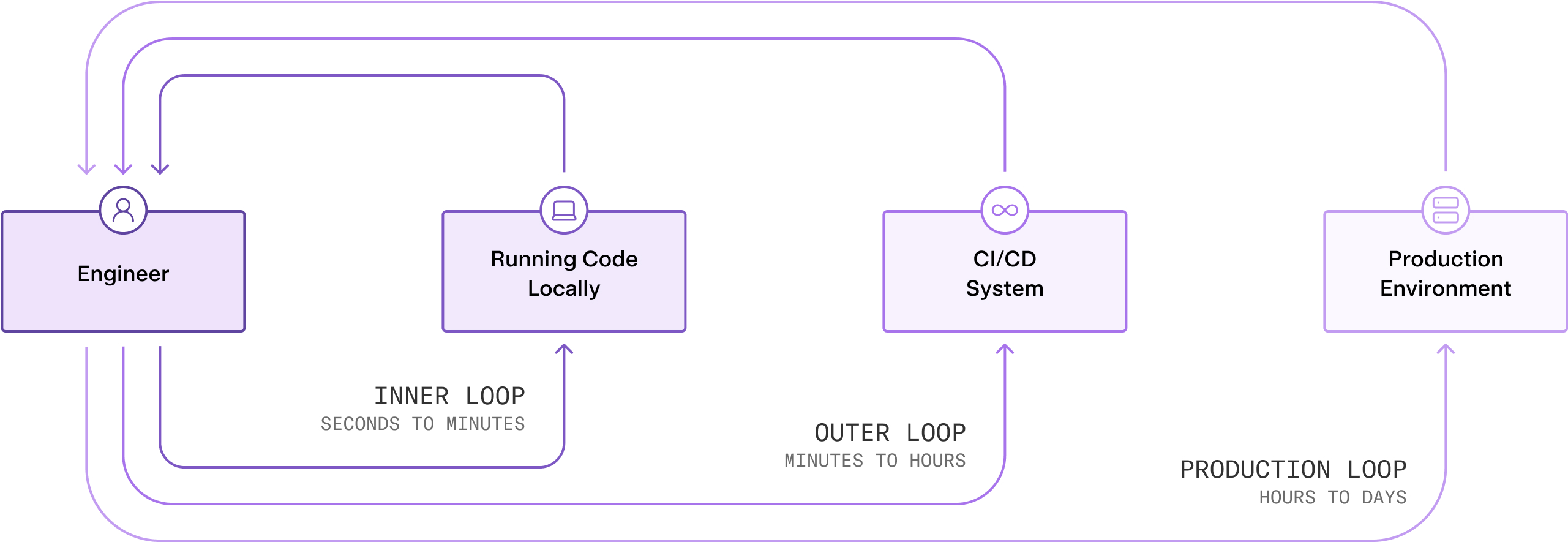

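Every dev process runs through three concentric feedback loops: the inner loop (writing code in your editor), the outer loop (CI and code review), and the production loop (running systems and incidents).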

The cost and complexity of fixing issues explode as you move outward. A syntax error in your IDE takes seconds to fix. A production incident might require hotfixes, rollbacks, and war rooms.

Until GitHub Copilot became generally available in 2022, each loop was entirely human-driven. We wrote code character by character, spelunked through CI logs manually, and got paged at 3 AM to dig through dashboards. AI is now rewiring each of these loops.

An agent getting lost in a codebase that’s seven abstractions deep is facing the same problem as a new hire. A cryptic alert is useless to both.

So we built our process around a few key principles: clear interfaces, minimal abstractions, and fast feedback loops.

None of this is revolutionary, and that’s the point. For years, we’ve treated these principles as ideals. AI agents treat them as requirements. It turns out the secret to building a great environment for AI is to first build a great environment for your engineers.

In the inner loop, what some call “vibe coding” is becoming standard. And no, this isn't an excuse to turn off your brain and let the AI write spaghetti code. What gets merged is ultimately your responsibility. An AI still needs clear direction, and the best way to provide that is with a well-defined reward function: a solid suite of tests.

But building that test suite first requires you to do the actual engineering work: have a clear product vision, design how new code fits into the existing architecture, and then capture that expected behavior in your tests. Once that framework is in place, you can prompt your way to passing them.
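As a minimal sketch of what "tests as the reward function" can look like, the example below pins down the behavior of a hypothetical `parse_duration` helper before any implementation is prompted for. The function name, its accepted formats, and the reference implementation are illustrative assumptions, not code from our repository.

```python
import re
import unittest

# Hypothetical helper used only for illustration: converts strings such as
# "90s", "15m", or "2h" into a number of seconds.
_UNITS = {"s": 1, "m": 60, "h": 3600}


def parse_duration(text: str) -> int:
    """One implementation that satisfies the tests below."""
    match = re.fullmatch(r"(\d+)([smh])", text.strip())
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    value, unit = match.groups()
    return int(value) * _UNITS[unit]


class ParseDurationTest(unittest.TestCase):
    """Written first: these cases are the spec an agent has to satisfy."""

    def test_seconds_minutes_hours(self):
        self.assertEqual(parse_duration("90s"), 90)
        self.assertEqual(parse_duration("15m"), 900)
        self.assertEqual(parse_duration("2h"), 7200)

    def test_surrounding_whitespace_is_ignored(self):
        self.assertEqual(parse_duration(" 5m "), 300)

    def test_rejects_unknown_units_and_garbage(self):
        for bad in ("10d", "m5", "", "1.5h"):
            with self.assertRaises(ValueError):
                parse_duration(bad)
```

Saved as a module, this runs with `python -m unittest`. The design choice worth noting is the order: the test class encodes the decisions (units, whitespace handling, what counts as invalid), so the agent's job is reduced to turning red tests green.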

We use tools like Claude Code and Gemini extensively for this. Sure, the code gets written faster, but the real win is in reinvesting that time in up-front thinking, so that the final product is simpler and more maintainable. Our agents run tests and end-to-end checks as we code, creating the same rapid feedback that helps us stay in flow.

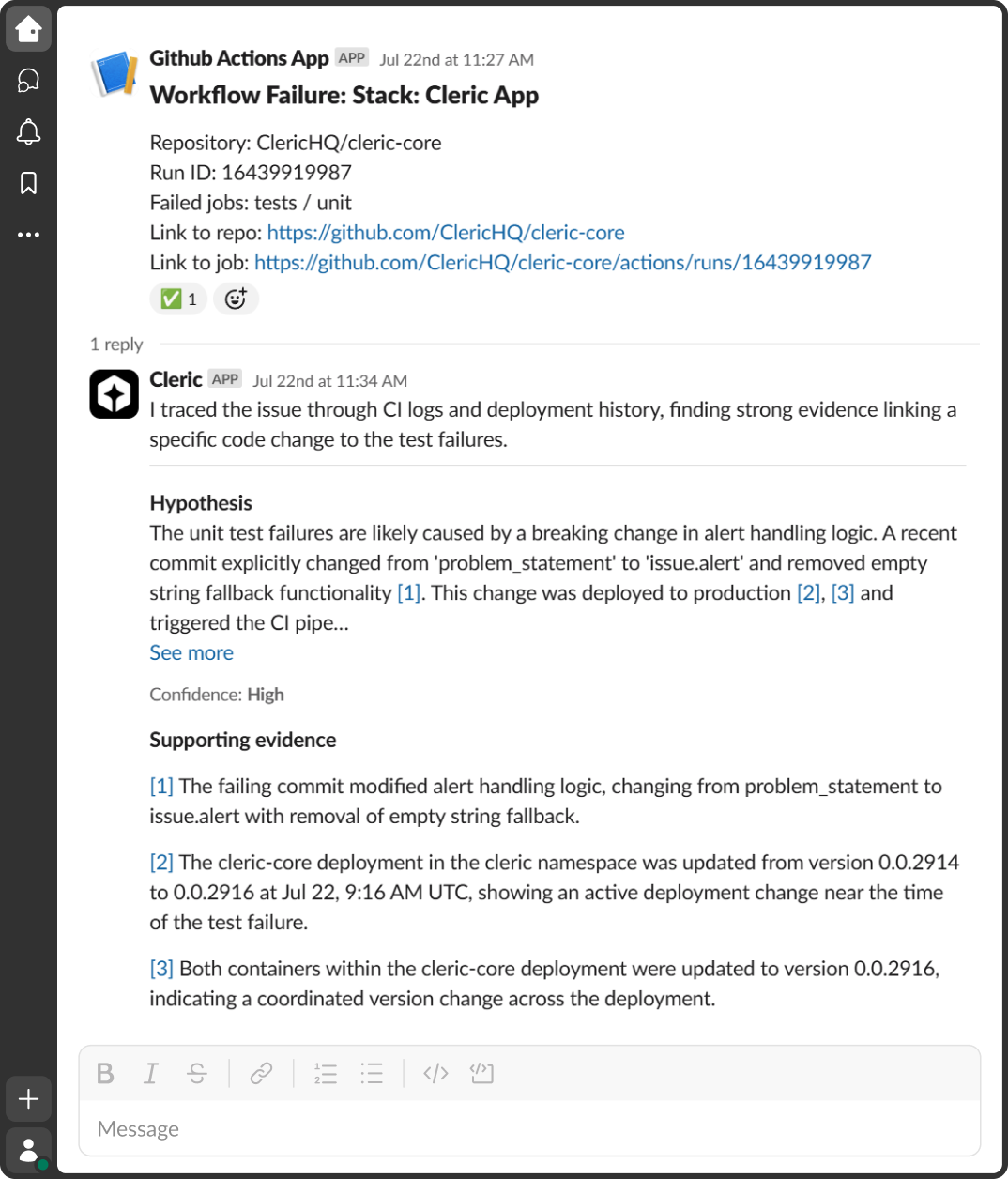

A CI build breaks. The developer stops what they're doing, sighs, and starts digging through logs.

We have Cleric do that first pass automatically. Instead of just a red build, the developer gets a diagnosis.

This turns a 10-minute manual investigation into a 1-minute review. You immediately know why it failed and what to do next. That means fewer interruptions and faster fixes.
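We don't cover how Cleric works internally here. Purely as an illustration of what a first pass over a failed build involves, the sketch below pulls the first error line and a little surrounding context out of a raw CI log, so a reviewer (or an agent) starts from the relevant excerpt. The `ERROR_PATTERN` regex, the `first_failure` name, and the sample log are all assumptions made up for this example.

```python
import re
from typing import Optional

# Assumed markers of a failing step; real CI systems differ.
ERROR_PATTERN = re.compile(r"\b(ERROR|FAILED|Traceback)\b")


def first_failure(log: str, context: int = 2) -> Optional[str]:
    """Return the first matching line plus `context` lines on either side."""
    lines = log.splitlines()
    for i, line in enumerate(lines):
        if ERROR_PATTERN.search(line):
            start = max(0, i - context)
            end = min(len(lines), i + context + 1)
            return "\n".join(lines[start:end])
    return None  # nothing that looks like a failure


# Invented log, for illustration only.
sample_log = """\
step 1/3: install dependencies ... ok
step 2/3: lint ... ok
step 3/3: run tests
tests/test_api.py::test_timeout FAILED
AssertionError: expected 504, got 500
1 failed, 41 passed
"""

excerpt = first_failure(sample_log)
print(excerpt)
```

An excerpt like this is only the input to a diagnosis, not the diagnosis itself; the point is that narrowing hundreds of log lines to a handful is mechanical work nobody needs to do by hand.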

Code review is also a great use case in the outer loop. The hardest part of code review isn't catching bugs; it's enforcing architectural principles and reducing accidental complexity. To solve this, we created a simple vibes test in our CI.

Any engineer can add rules to a markdown file in our monorepo called `code_requirements.md`. It contains simple, plain-English guardrails like:

> ## Rules
>
> - Do not add new docstrings to code that does not already have them
>
> - The long term vision for the ResponseHandlers are for them to be event driven. Don't add methods that assume synchronous execution.

Our "vibes test" compares every code diff against these requirements, giving engineers a way to check for architectural alignment on demand. Anyone can run it locally while coding to get immediate feedback, ensuring their changes are on the right track long before a pull request is ever opened. It’s a simple way to codify our conventions and keep the codebase consistent, making it easier for both humans and agents to build in the right direction.
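A minimal sketch of how such a check could be wired up, assuming the rules live as markdown bullets and the diff comes from `git diff`. The function names, the prompt wording, the `PASS`/`FAIL` reply convention, and the `ask_model` callable (a stand-in for whichever LLM client you use) are hypothetical, not a description of our actual implementation.

```python
import subprocess
from typing import Callable, List


def load_rules(markdown: str) -> List[str]:
    """Collect the bullet items ("- ...") from the requirements file."""
    return [
        line.strip()[2:].strip()
        for line in markdown.splitlines()
        if line.strip().startswith("- ")
    ]


def build_prompt(rules: List[str], diff: str) -> str:
    """Assemble one prompt that pairs the numbered rules with the diff."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, 1))
    return (
        "Review the diff against each rule. Reply with PASS, or with FAIL "
        "followed by the violated rule numbers and a short reason.\n\n"
        f"Rules:\n{numbered}\n\nDiff:\n{diff}"
    )


def vibes_test(markdown: str, diff: str, ask_model: Callable[[str], str]) -> bool:
    """Return True when the model's verdict starts with PASS (assumed convention)."""
    verdict = ask_model(build_prompt(load_rules(markdown), diff))
    return verdict.strip().upper().startswith("PASS")


def working_tree_diff(base: str = "main") -> str:
    """Diff of the current checkout against `base` (requires git)."""
    return subprocess.run(
        ["git", "diff", base], capture_output=True, text=True, check=True
    ).stdout
```

Run locally, this amounts to reading `code_requirements.md`, calling `working_tree_diff()`, and passing both to `vibes_test` along with your model client. Injecting `ask_model` as a plain callable keeps the check testable with a stub and avoids tying the sketch to any particular provider.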

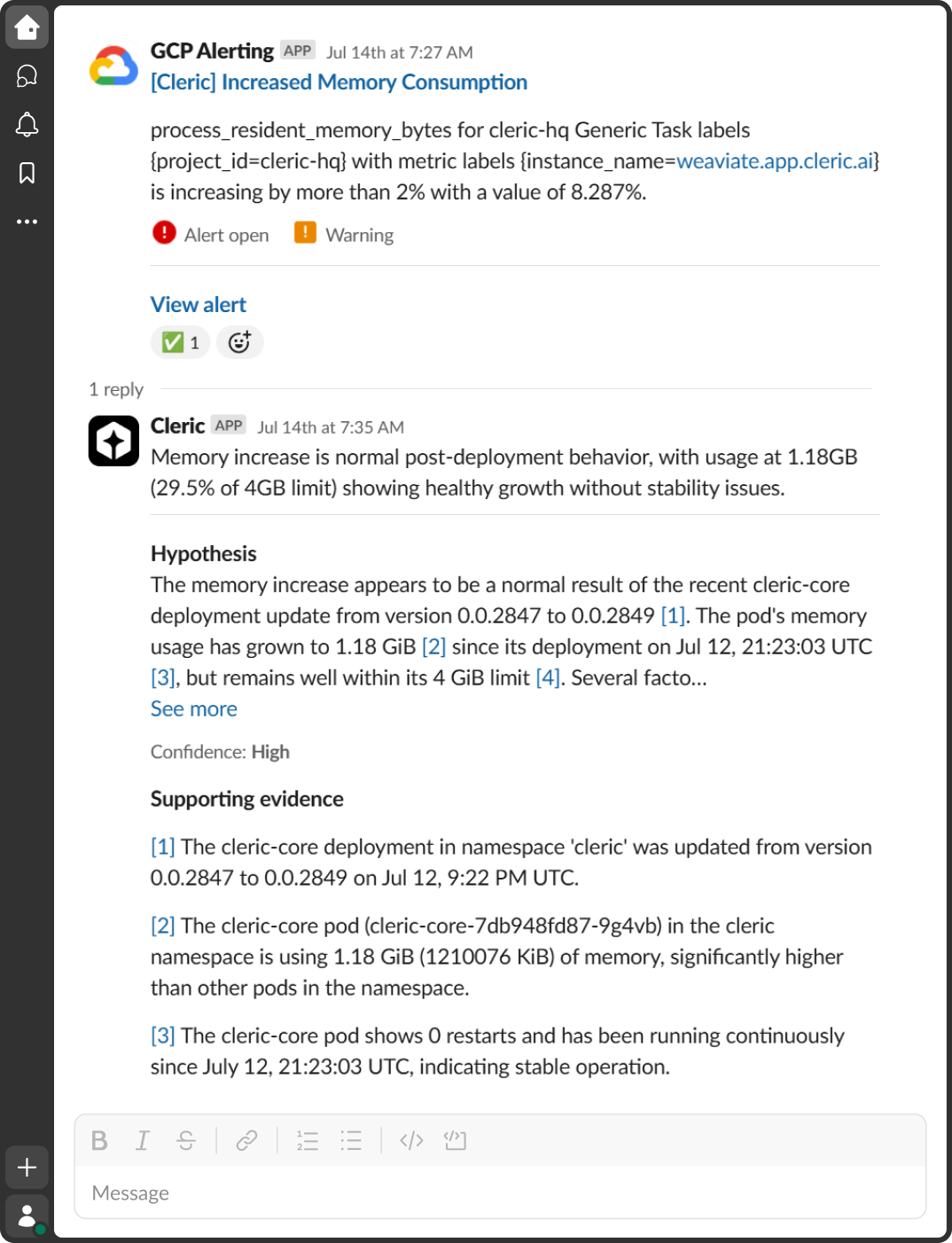

The production loop is the most stressful. Production alerts, especially false alarms, are a constant source of anxiety. So instead of our on-call engineer dropping everything to investigate a scary-looking memory alert, we have Cleric do the first analysis.

In one recent case, we got a memory alert from a customer’s instance. Cleric determined it was normal post-deployment behavior and confirmed the system was stable. Our engineer scanned the report in 30 seconds, confirmed it was a false alarm, and got back to coding.

For real incidents, the AI doesn’t just alert; it investigates. Instead of a cryptic error, engineers get an evidence-backed hypothesis of what could have gone wrong.

The fix stays in the engineer’s hands, but the detective work disappears.

The old ideal of staying small and lean is more achievable than ever. Using AI as a force multiplier lets us build a team with a high signal-to-noise ratio: a focused group of domain experts who dedicate their time to high-value problems, not operational noise.

Platform teams can focus on architecture, not alert triage. Application engineers can focus on complex business logic and innovation. Both are supported by an AI assistant that handles the rote investigation.

The future of engineering is a human-agent collaboration that closes the loop between idea, code, and impact. It gives engineers what they crave most: uninterrupted time to actually build.